- #Webscraper chrome select images how to#

- #Webscraper chrome select images install#

- #Webscraper chrome select images driver#

- #Webscraper chrome select images code#

If we run the script that launches Chrome, we'll see a browser window open up and take us to our Twitch URL. However, when web scraping we often don't want our screen taken up by GUI elements. For this we can use headless mode, which strips the browser of all GUI elements and lets it run silently in the background. In Selenium, we can enable it through the `options` keyword argument:

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless")  # run without a visible GUI
    options.add_argument("--window-size=1920,1080")  # set window size to native GUI size
    options.add_argument("--start-maximized")  # ensure window is full-screen
    driver = webdriver.Chrome(options=options)

Additionally, when web scraping we don't need to render images, which is a slow and resource-intensive process.
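Skipping image rendering can be sketched as follows. This is a minimal sketch under stated assumptions: it relies on the commonly used Chrome preference `profile.managed_default_content_settings.images` (where `2` means "block"), and the helper names `image_blocking_prefs` and `launch_chrome_without_images` are hypothetical. The Selenium import is deferred into the launch function so the preference helper can be inspected without a browser installed.

```python
def image_blocking_prefs() -> dict:
    """Chrome preferences that ask the browser not to load images."""
    # 2 = block; the preference key is an assumption based on common usage
    return {"profile.managed_default_content_settings.images": 2}


def launch_chrome_without_images():
    """Start Chrome with image loading disabled (needs Selenium and Chrome)."""
    # Deferred import: only required when a browser is actually launched
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    # Chrome-specific preferences are passed as an experimental option
    options.add_experimental_option("prefs", image_blocking_prefs())
    return webdriver.Chrome(options=options)
```

Calling `launch_chrome_without_images()` returns a driver object; call `driver.quit()` on it when the scrape is finished so the browser process does not linger.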


To start our scraper code, let's create a Selenium webdriver object and launch a Chrome browser:

    from selenium import webdriver

    driver = webdriver.Chrome()  # launch a Chrome browser window
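A minimal sketch of the next step, navigating to a page and reading something back, might look like this. The helper names `chrome_session` and `fetch_title` are illustrative assumptions, not part of the original script, and the Selenium import is deferred so that `fetch_title` can be tried with any driver-like object.

```python
from contextlib import contextmanager


@contextmanager
def chrome_session(options=None):
    """Yield a Chrome driver and always close the browser afterwards."""
    # Deferred import: only needed when a real browser is launched
    from selenium import webdriver

    driver = webdriver.Chrome(options=options)
    try:
        yield driver
    finally:
        driver.quit()  # closes every window and ends the driver process


def fetch_title(driver, url: str) -> str:
    """Navigate to `url` and return the page title."""
    driver.get(url)  # blocks until the page load completes (default strategy)
    return driver.title
```

Usage might look like `with chrome_session() as driver: print(fetch_title(driver, "https://www.twitch.tv/"))`, where the URL is only a placeholder. Wrapping the driver in a context manager ensures the browser is closed even if the scraping code raises an exception.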

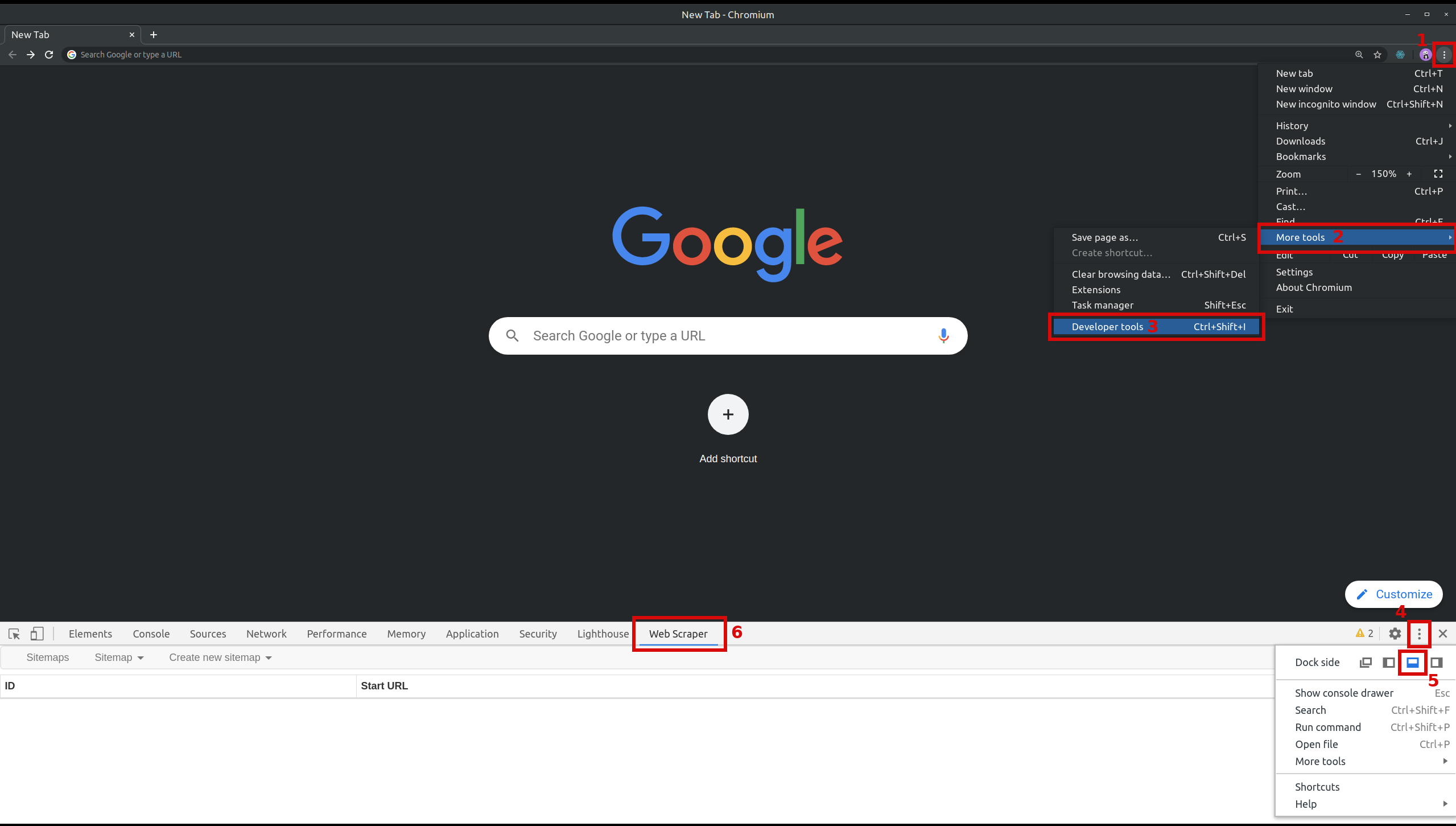


In this Selenium with Python tutorial, we'll take a look at what Selenium is and at its common functions used in web scraping dynamic pages and web applications. We'll cover some general tips and tricks and common challenges, and wrap it all up with an example scraping project.

Selenium was initially a tool created to test a website's behavior, but it quickly became a general web browser automation tool used in web scraping and other automation tasks. This tool is quite widespread and is capable of automating different browsers like Chrome, Firefox, Opera and even Internet Explorer through a middleware called Selenium webdriver.

Webdriver is the first browser automation protocol designed by the W3C organization, and it's essentially a middleware protocol service that sits between the client and the browser, translating client commands to web browser actions. In other words, Selenium webdriver translates our Python client's commands to something a web browser can understand.

Currently, it's one of two available protocols for web browser automation (the other being Chrome Devtools Protocol), and while it's an older protocol, it's still capable and perfectly viable for web scraping. Let's take a look at what it can do!

Installing Selenium

Selenium webdriver for Python can be installed through the pip command:

    $ pip install selenium

However, we also need webdriver-enabled browsers. We recommend either the Firefox or Chrome browser.

(Video: Selenium driver in IPython demonstration)
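To make the middleware role of webdriver more concrete, here is a rough sketch of how a few client commands map onto WebDriver HTTP requests. It is an illustration only, not Selenium's internal code: the function name `webdriver_request` and the command labels are hypothetical, while the endpoint paths follow the W3C WebDriver specification.

```python
import json

# Command label -> (HTTP method, path template); the labels are hypothetical.
ENDPOINTS = {
    "new_session": ("POST", "/session"),
    "navigate": ("POST", "/session/{session_id}/url"),
    "get_title": ("GET", "/session/{session_id}/title"),
    "quit": ("DELETE", "/session/{session_id}"),
}


def webdriver_request(command, session_id="", payload=None):
    """Return the (method, path, body) a client would send for a command."""
    method, template = ENDPOINTS[command]
    path = template.format(session_id=session_id)
    # In this sketch only POST requests carry a JSON body
    body = json.dumps(payload or {}) if method == "POST" else None
    return method, path, body


print(webdriver_request("navigate", "abc123", {"url": "https://example.com"}))
# ('POST', '/session/abc123/url', '{"url": "https://example.com"}')
```

A call such as `driver.get(url)` in the Python client ends up as a request of the `navigate` kind, which the browser driver then turns into an actual navigation in the browser.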


The modern web is becoming increasingly complex and reliant on JavaScript, which makes traditional web scraping difficult. Traditional web scrapers in Python cannot execute JavaScript, meaning they struggle with dynamic web pages, and this is where Selenium, a browser automation toolkit, comes in handy!

Browser automation is frequently used in web scraping to utilize browser rendering power to access dynamic content. Additionally, it's often used to avoid web scraper blocking, as real browsers tend to blend in with the crowd more easily than raw HTTP requests.

We've already briefly covered 3 available tools (Playwright, Puppeteer and Selenium) in our overview article How to Scrape Dynamic Websites Using Headless Web Browsers, and in this one we'll dig a bit deeper into understanding Selenium, the most popular browser automation toolkit out there.
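To illustrate why scrapers that don't execute JavaScript struggle with dynamic pages, the sketch below parses a small page with Python's standard-library `html.parser`, which, like other plain HTML parsers, does not run scripts. The HTML snippet and the class name `TextInDiv` are made up for this example.

```python
from html.parser import HTMLParser

# A made-up page whose visible content is filled in by JavaScript.
PAGE = """
<html><body>
  <div id="streams"></div>
  <script>
    document.getElementById("streams").textContent = "3 live streams";
  </script>
</body></html>
"""


class TextInDiv(HTMLParser):
    """Collect text found inside <div> elements."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # how many <div> elements are currently open
        self.text = []

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "div":
            self.depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that sits inside a <div>
        if self.depth > 0 and data.strip():
            self.text.append(data.strip())


parser = TextInDiv()
parser.feed(PAGE)
print(parser.text)  # [] because the script was never executed
```

A real browser would run the script and show the text inside the `div`, which is the gap that browser automation fills.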
